Written by Miguel Román | May 2, 2019, updated August 5, 2024

The call drop ratio (CDR) is one of the most important key performance indicators (KPIs) that operators monitor constantly, and it plays a major role in how subscribers perceive network quality.

To measure CDR, operators conduct a drive testing campaign over a specific area, making multiple calls between mobile devices (smartphones) running QualiPoc tests. Because very few calls end in a dropped state, several thousand calls are needed to measure CDR with statistical significance.
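To see why several thousand calls are needed, the sketch below estimates how many calls it takes to measure a given CDR within a chosen relative margin. It assumes the normal (Wald) approximation to the binomial distribution, which the text does not specify; the function name `required_calls` and the example figures (a 1% CDR measured to within ±25% of its value at 95% confidence) are hypothetical and chosen only for illustration.

```python
import math
from statistics import NormalDist


def required_calls(cdr: float, relative_margin: float, confidence: float = 0.95) -> int:
    """Hypothetical helper: approximate number of calls needed so that the CDR
    estimate lies within +/- relative_margin * cdr of the true value.

    Assumes the normal (Wald) approximation: n = z^2 * p * (1 - p) / E^2.
    """
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    absolute_margin = relative_margin * cdr  # E, expressed as an absolute ratio
    return math.ceil(z * z * cdr * (1 - cdr) / absolute_margin ** 2)


# Hypothetical example: a 1% CDR measured to within +/-0.25 percentage points.
print(required_calls(0.01, 0.25))
```

Under these assumptions the function returns just over 6,000 calls, and because the margin enters squared, halving it roughly quadruples the number of calls.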

The need for so many call samples makes CDR measurements impractical for smaller areas such as shopping malls. Consequently, we end up with a single CDR value per drive testing campaign.

Machine learning use case

Let me illustrate the difficulty of measuring CDR with an analogy. Imagine you are coaching pole vaulters for the Olympics and want to test whether they can clear a certain height. Would you make them jump a thousand times and measure their success rate?

Obviously not. Instead, you would watch a small number of jumps closely and extract meaningful information from them: for example, the margin by which they cleared the bar when they succeeded, the state of the bar after they passed, and whether they merely grazed the bar or knocked it off when they failed.

To apply the same principle to CDR, we need a way to score each call by how close it came to dropping, regardless of its actual outcome. Such a score indicates the stability of a call by measuring how close it is to the calls that ended up dropping.

The Call Stability Score

The Call Stability Score (CSS) is our patent-pending technology, implemented in our analytics software SmartAnalytics. The CSS rates the stability of each call based on high-dimensional datasets covering hundreds of thousands of good and bad calls.

The CSS depends on how multiple features evolve over time (for example, a dropped call is likely to be caused by an event that happened a few seconds earlier). Therefore, the input to the function that calculates the CSS is very high-dimensional, as it contains the time series of multiple KPIs over a defined period. Fortunately, some ML model architectures are specifically designed to handle sequences of features, such as recurrent neural networks based on LSTM (Long Short-Term Memory) cells.
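To make the idea of feeding KPI time series into an LSTM more concrete, here is a minimal, dependency-free sketch of a single LSTM layer that reads a window of per-second KPI samples and encodes it into a fixed-length vector. It is not the CSS model: the class name `LSTMEncoder`, the three hypothetical normalized KPIs per time step, the hidden size and the random, untrained weights are all illustrative assumptions. A real model would learn its weights during training and would normally be built with a deep-learning framework.

```python
import math
import random
from typing import List

Vector = List[float]


def sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def matvec(m: List[Vector], v: Vector) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LSTMEncoder:
    """Hypothetical single-layer LSTM mapping a sequence of feature vectors to its final hidden state."""

    GATES = ("i", "f", "o", "g")  # input, forget and output gates, plus candidate cell value

    def __init__(self, input_size: int, hidden_size: int, seed: int = 0):
        rng = random.Random(seed)

        def init(rows: int, cols: int) -> List[Vector]:
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.hidden_size = hidden_size
        # Per gate: input weights W, recurrent weights U and bias b (random and untrained here).
        self.W = {k: init(hidden_size, input_size) for k in self.GATES}
        self.U = {k: init(hidden_size, hidden_size) for k in self.GATES}
        self.b = {k: [0.0] * hidden_size for k in self.GATES}
        self.b["f"] = [1.0] * hidden_size  # common initialization: forget gate starts mostly open

    def step(self, x: Vector, h: Vector, c: Vector):
        pre = {}
        for k in self.GATES:
            wx, uh = matvec(self.W[k], x), matvec(self.U[k], h)
            pre[k] = [a + r + bias for a, r, bias in zip(wx, uh, self.b[k])]
        i = [sigmoid(v) for v in pre["i"]]
        f = [sigmoid(v) for v in pre["f"]]
        o = [sigmoid(v) for v in pre["o"]]
        g = [math.tanh(v) for v in pre["g"]]
        # New cell state keeps part of the old memory (f * c) and adds new information (i * g).
        c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
        h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
        return h_new, c_new

    def encode(self, sequence: List[Vector]) -> Vector:
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        for x in sequence:  # one LSTM step per time sample
            h, c = self.step(x, h, c)
        return h


# Hypothetical input: 10 one-second samples of 3 normalized KPIs (e.g. signal level, SINR, BLER).
window = [[0.5 - 0.05 * t, 0.3 - 0.04 * t, 0.01 * t] for t in range(10)]
encoder = LSTMEncoder(input_size=3, hidden_size=8)
embedding = encoder.encode(window)
print([round(v, 3) for v in embedding])
```

The final hidden state summarizes the whole window in a fixed number of values, which is what lets a downstream classifier work on sequences of any length.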

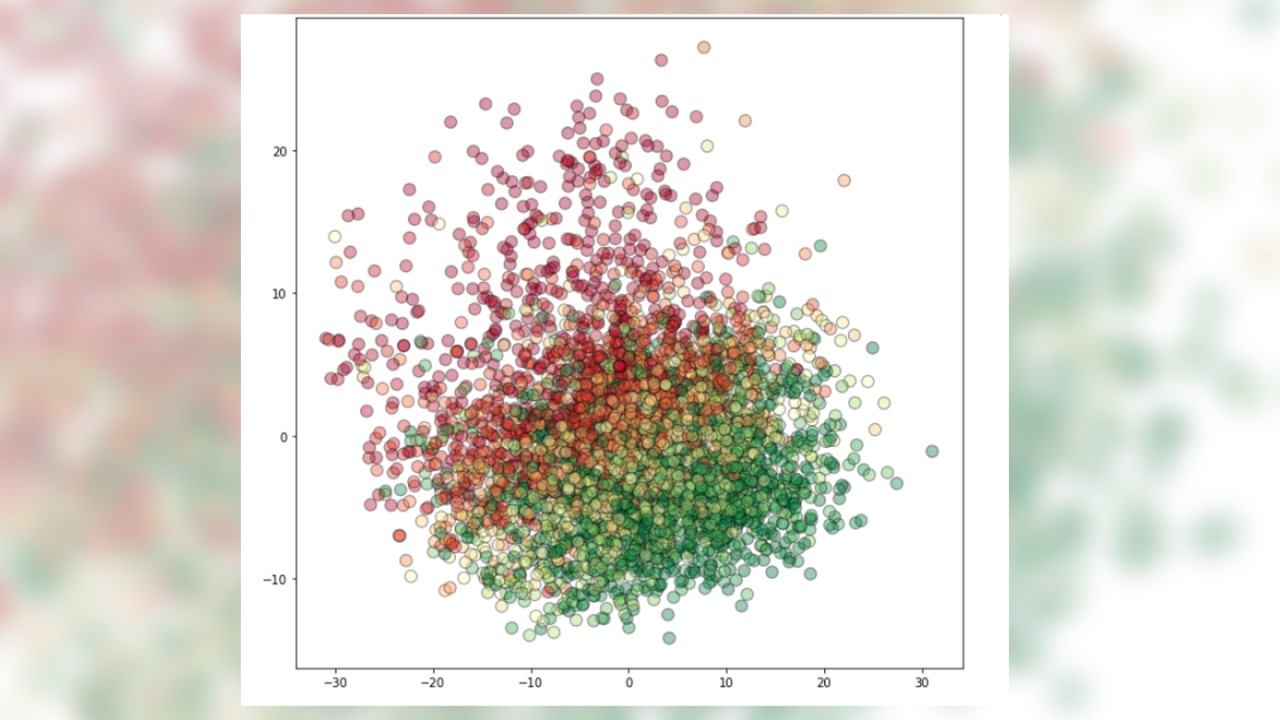

The figure shows a real plot of call tests after they were scored by our ML model. We provided a balanced set of dropped (red dots) and non-dropped (green dots) calls. For visualization purposes, the input feature space was reduced to two dimensions using PCA (Principal Component Analysis).
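A two-dimensional PCA projection like the one used for the figure can be sketched as follows. This dependency-free version finds the two leading principal components by power iteration with deflation on the covariance matrix; in practice a library routine (for example scikit-learn's `PCA`) would normally be used. The function name `pca_2d` and the synthetic five-dimensional data are illustrative assumptions; in the CSS setting, each row would be one call's feature vector.

```python
import math
import random
from typing import List, Tuple

Matrix = List[List[float]]


def pca_2d(points: Matrix, iterations: int = 500, seed: int = 0) -> Tuple[Matrix, Matrix]:
    """Hypothetical helper: project points onto their two leading principal components.

    Returns (projected_points, components). Components are found by power
    iteration on the covariance matrix, deflating after each one.
    """
    n, d = len(points), len(points[0])
    mean = [sum(p[j] for p in points) / n for j in range(d)]
    centered = [[p[j] - mean[j] for j in range(d)] for p in points]
    cov = [[sum(r[a] * r[b] for r in centered) / (n - 1) for b in range(d)] for a in range(d)]

    rng = random.Random(seed)
    components: Matrix = []
    for _ in range(2):
        v = [rng.uniform(-1.0, 1.0) for _ in range(d)]
        for _ in range(iterations):
            w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            v = [x / norm for x in w]
        # Rayleigh quotient: the variance along v, i.e. its eigenvalue.
        eigenvalue = sum(v[a] * cov[a][b] * v[b] for a in range(d) for b in range(d))
        components.append(v)
        # Deflation: remove this direction so the next pass finds the next component.
        cov = [[cov[a][b] - eigenvalue * v[a] * v[b] for b in range(d)] for a in range(d)]

    projected = [[sum(r[j] * c[j] for j in range(d)) for c in components] for r in centered]
    return projected, components


# Synthetic 5-D data: most variance along the first axis, some along the second.
rng = random.Random(42)
data = [[rng.gauss(0, 3), rng.gauss(0, 1)] + [rng.gauss(0, 0.1) for _ in range(3)] for _ in range(200)]
proj, comps = pca_2d(data)
print(len(proj), len(proj[0]))
```

Because PCA only keeps the directions of largest variance, a boundary that looks like a straight line in such a plot is a simplified view of the separation in the full feature space.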

After a lengthy training process, our ML model finds the boundary that separates dropped from non-dropped calls with minimal error. As the figure suggests, this boundary is a line in 2D; it would be a plane in a similar 3D plot, and in the real, high-dimensional feature space it is a hyperplane. The hyperplane is the basis of the score: we measure the Euclidean distance from a particular call to the learned hyperplane and scale it to a number between 0 and 1.
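The scoring step can be sketched as the signed Euclidean distance of a call's feature vector x to a hyperplane w·x + b = 0, which is (w·x + b) / ‖w‖, squashed into the range 0 to 1. The text does not say how the distance is scaled, so this sketch assumes a logistic function with a hypothetical `temperature` parameter, and it assumes the non-drop side of the hyperplane is the positive one, so a higher score means a more stable call. The names `signed_distance` and `stability_score` and the example hyperplane are illustrative.

```python
import math
from typing import Sequence


def signed_distance(x: Sequence[float], w: Sequence[float], b: float) -> float:
    """Signed Euclidean distance from point x to the hyperplane w.x + b = 0."""
    norm_w = math.sqrt(sum(wi * wi for wi in w))
    return (sum(wi * xi for wi, xi in zip(w, x)) + b) / norm_w


def stability_score(x: Sequence[float], w: Sequence[float], b: float, temperature: float = 1.0) -> float:
    """Hypothetical scaling of the signed distance to (0, 1) with a logistic function.

    Assumption: the non-drop side is positive, so calls far on that side score
    close to 1, calls on the boundary score 0.5, and calls deep on the drop
    side score close to 0.
    """
    z = signed_distance(x, w, b) / temperature
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


# Hypothetical 2-D hyperplane 3*x1 + 4*x2 - 5 = 0.
w, b = [3.0, 4.0], -5.0
for call in ([3.0, 4.0], [1.0, 0.5], [0.0, 0.0]):
    print(call, round(signed_distance(call, w, b), 3), round(stability_score(call, w, b), 3))
```

The three example calls lie 4 units on the positive side, exactly on the boundary, and 1 unit on the negative side, giving scores of about 0.98, 0.5 and 0.27.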

Operator benefits

The Call Stability Score (CSS) offers operators many benefits. One is that it removes one of the main elements of chance in benchmarking campaigns. Because dropped calls are very rare in today’s networks, a single extra dropped call can decide whether an operator wins or loses the CDR comparison.
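A quick calculation shows how fragile such a comparison is. The sketch below uses hypothetical numbers (1,000 calls per operator, with 5 and 6 drops) and the Wilson score interval, a standard confidence interval for a proportion chosen here for illustration; the function name `wilson_interval` is likewise illustrative. Although one extra drop moves the CDR from 0.5% to 0.6%, the two 95% intervals overlap almost entirely, so the ranking is largely down to chance.

```python
import math
from statistics import NormalDist


def wilson_interval(drops: int, calls: int, confidence: float = 0.95):
    """Wilson score confidence interval for the drop ratio drops / calls."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    p = drops / calls
    denom = 1 + z * z / calls
    center = (p + z * z / (2 * calls)) / denom
    half_width = z * math.sqrt(p * (1 - p) / calls + z * z / (4 * calls * calls)) / denom
    return center - half_width, center + half_width


# Hypothetical benchmark: two operators, 1,000 calls each, differing by a single drop.
for name, drops in (("Operator A", 5), ("Operator B", 6)):
    low, high = wilson_interval(drops, 1000)
    print(f"{name}: CDR {drops / 1000:.1%}, 95% CI [{low:.2%}, {high:.2%}]")
```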

The CSS, however, transforms the stark binary of dropped versus not dropped into a continuous score that reflects the likelihood of a drop. An averaged CSS gives a solid indication of the risk of dropped calls with far fewer calls than traditional CDR requires. The comparison is also fairer, because it considers all calls, not only the very few that dropped.

The CSS also enables us to point users to potential risks and to target optimization efforts at areas with low scores, even if no calls dropped during the actual tests. Hence, we can anticipate issues that would usually go undetected in a drive testing campaign.
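Averaging scores per area and flagging weak areas could look like the sketch below. The function name `flag_low_stability_areas`, the area names, the per-call scores and the 0.5 threshold are all hypothetical, and the sketch assumes that higher scores mean more stable calls. In the example, the shopping mall is flagged even though none of its calls dropped.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def flag_low_stability_areas(
    calls: Iterable[Tuple[str, float, bool]], threshold: float = 0.5
) -> List[Tuple[str, float, int]]:
    """Hypothetical helper: return (area, mean score, number of drops) for every
    area whose mean score is below threshold, weakest area first."""
    scores: Dict[str, List[float]] = defaultdict(list)
    drops: Dict[str, int] = defaultdict(int)
    for area, score, dropped in calls:
        scores[area].append(score)
        drops[area] += int(dropped)
    averages = {area: mean(values) for area, values in scores.items()}
    flagged = [(area, avg, drops[area]) for area, avg in averages.items() if avg < threshold]
    return sorted(flagged, key=lambda item: item[1])


# Hypothetical per-call results: (area, stability score, dropped?)
calls = [
    ("shopping mall", 0.42, False),
    ("shopping mall", 0.38, False),
    ("shopping mall", 0.55, False),
    ("highway", 0.91, False),
    ("highway", 0.88, False),
    ("city center", 0.47, True),
    ("city center", 0.30, False),
]
for area, avg, n_drops in flag_low_stability_areas(calls):
    print(f"{area}: mean score {avg:.2f}, drops {n_drops}")
```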

The CSS is now also available for evaluating 5G voice calls (VoNR). Collecting a significant amount of training data from 5G Standalone networks to train the algorithms for 5G calls took time.

Read the other parts of this series and learn about the role of machine learning in the telecom industry: